Deepwave Digital has just released a comprehensive workflow toolbox for creating, training, optimizing, and deploying a neural network on the AIR-T. The toolbox works natively on the AIR-T and AirStack, with no new packages or applications to install, so the workflow for an AI-enabled radio frequency (RF) system has never been simpler. You can now deploy an existing TensorFlow model on the AIR-T in less than one minute.

Read more below, or go straight to the code base, which runs natively on any of the AIR-T Embedded Series models.

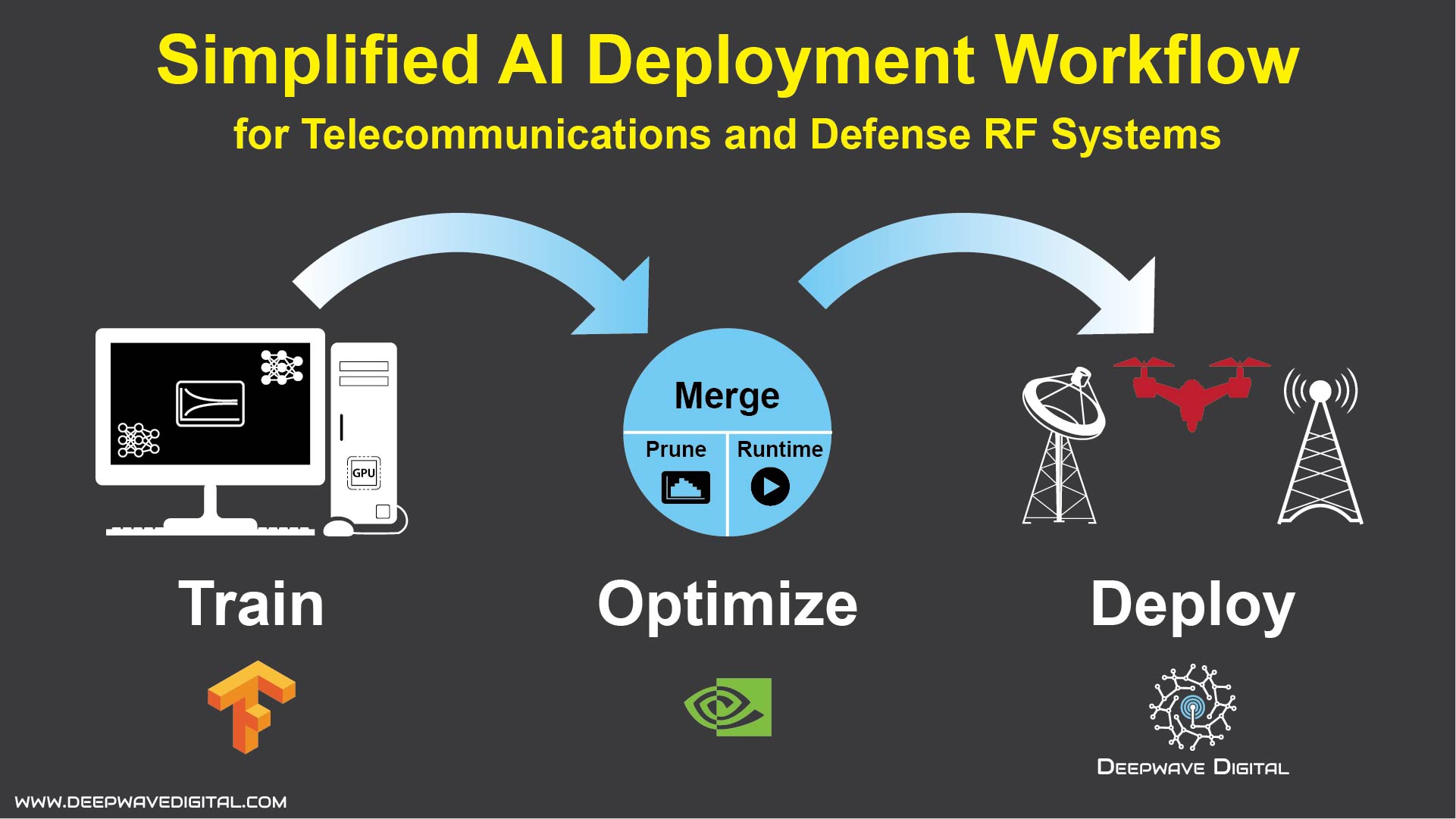

Training to Deployment Workflow

The figure above outlines the workflow for training, optimizing, and deploying a neural network on the AIR-T. All Python packages and dependencies are included in AirStack 0.3.0+, the API for the AIR-T.

Step 1: Train

To simplify the process, we provide an example TensorFlow neural network that performs a simple mathematical calculation instead of being trained on data. The toolbox provides all of the necessary code, examples, and benchmarking tools to guide the user through the training-to-deployment workflow. The process is exactly the same for any other trained neural network.
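To make the idea of a calculation-only network concrete, here is a minimal, framework-free sketch in plain Python. It assumes, purely for illustration, that the calculation is the mean power of a buffer of samples, expressed as an element-wise square followed by a single linear neuron with hand-set weights. The names `dense` and `mean_power_model` are hypothetical and are not part of the toolbox, which builds its example in TensorFlow.

```python
# Hypothetical illustration: a "network" whose weights are fixed by hand
# instead of learned, so its output is a known mathematical function.
# This is NOT the toolbox's model; it only mirrors the idea in plain Python.


def dense(inputs, weights, bias=0.0):
    """Single linear neuron: weighted sum of the inputs plus a bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def mean_power_model(samples):
    """Square each sample, then average the squares.

    The averaging step is a linear neuron whose weights are all 1/N,
    so nothing needs to be trained.
    """
    squared = [x * x for x in samples]
    weights = [1.0 / len(samples)] * len(samples)
    return dense(squared, weights)


if __name__ == "__main__":
    buffer = [1.0, -1.0, 2.0, -2.0]
    print(mean_power_model(buffer))  # prints 2.5
```

Because the expected output is known in closed form, a model like this is convenient for checking that each later stage of the workflow still produces the same numbers.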

Step 2: Optimize

Optimize the neural network model using NVIDIA's TensorRT. The output of this step is a file containing the optimized network for deployment on the AIR-T.

Step 3: Deploy

The final step is to deploy the optimized neural network on the AIR-T for inference. The toolbox does this by leveraging the GPU/CPU shared memory interface on the AIR-T to receive samples from the receiver and feed them to the neural network using Zero Copy, i.e., no device-to-host or host-to-device copies are performed. This maximizes the data rate while minimizing latency.
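The zero-copy idea can be illustrated with a host-only analogy in standard Python. This is a sketch under stated assumptions, not the toolbox's code: the buffer here is ordinary host memory rather than GPU/CPU shared memory, and `receive` and `infer` are hypothetical stand-ins for the receiver and the inference engine. The point is only that both sides work on the same bytes, so new samples are visible to the consumer without any copy.

```python
from array import array

# Hypothetical stand-in for the buffer that the receiver fills and the
# inference step reads. On the AIR-T this would be memory shared by the
# CPU and GPU; here it is plain host memory holding 32-bit floats.
shared = array("f", [0.0] * 8)

# Two memoryviews over the same buffer: neither one copies the data.
rx_view = memoryview(shared)      # "receiver" side, writes samples
infer_view = memoryview(shared)   # "inference" side, reads samples


def receive(view, samples):
    """Write new samples in place, as a receiver would fill its buffer."""
    for i, s in enumerate(samples):
        view[i] = s


def infer(view):
    """Toy inference: mean power of whatever is currently in the buffer."""
    return sum(x * x for x in view) / len(view)


receive(rx_view, [1.0] * 8)
first = infer(infer_view)          # sees the samples just written

# An explicit copy, by contrast, is a snapshot and misses later writes.
copied = array("f", shared)
receive(rx_view, [2.0] * 8)
second = infer(infer_view)         # reflects the new samples
stale = infer(memoryview(copied))  # still reflects the old samples

print(first, second, stale)  # prints 1.0 4.0 1.0
```

Avoiding the copy matters more as the sample rate grows, since every transfer between host and device memory adds both latency and memory-bandwidth load.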

For more information, check out the open-source toolbox, which runs natively on any of the AIR-T Embedded Series models.