If you are considering the AIR-T for your wireless machine learning, digital signal processing, or high-performance computing application, you will need to know how to program it. The AIR-T is designed to reduce both the number of engineers and the engineering effort required to create an intelligent wireless system. Programming the AIR-T is simple and streamlined.

Ideally, you will understand how your AI algorithm works and be able to program in a language like Python. You won’t, however, have to worry about buffering, threading, talking to registers, etc. For power users, or those who want to get every last bit of performance out of the device, various drivers and hardware abstraction APIs are provided so you can write your own custom application.

If you are unfamiliar with deep learning, developing an algorithm is typically broken up into two stages: training and inference. Rather than rewriting the great online references covering these processes, here is an excellent blog that discusses training and inference and here is a great introduction to how neural networks operate.
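To make the two stages concrete, here is a minimal sketch in plain NumPy. It is not AIR-T code and does not use any Deepwave or NVIDIA API. The data set, the function names (`make_data`, `train`, `infer`), and the tiny logistic-regression model are all assumptions chosen for illustration. Training adjusts the weights using labeled examples, and inference applies the frozen weights to samples the model has never seen.

```python
import numpy as np


def make_data(n, rng):
    """Synthetic stand-in for two signal classes, two features per sample."""
    x0 = rng.normal(loc=-1.0, scale=0.5, size=(n, 2))  # class 0
    x1 = rng.normal(loc=+1.0, scale=0.5, size=(n, 2))  # class 1
    x = np.vstack([x0, x1])
    y = np.concatenate([np.zeros(n), np.ones(n)])
    return x, y


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(x, y, epochs=200, lr=0.5):
    """Training stage: fit weights to labeled data with gradient descent."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(x @ w + b)            # current predictions
        grad_w = x.T @ (p - y) / len(y)   # gradient of the cross-entropy loss
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(x, w, b):
    """Inference stage: apply the frozen weights, no labels or updates."""
    return (sigmoid(x @ w + b) > 0.5).astype(int)


rng = np.random.default_rng(0)

# Stage 1: training on labeled data
x_train, y_train = make_data(200, rng)
w, b = train(x_train, y_train)

# Stage 2: inference on unseen data (labels are used only to score the result)
x_new, y_new = make_data(50, rng)
pred = infer(x_new, w, b)
accuracy = float(np.mean(pred == y_new))
print(f"accuracy on unseen samples: {accuracy:.2f}")
```

Note that `infer` needs only the weights, not the training data or the training loop. That separation is what lets an edge device run inference on a model trained elsewhere.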

The AIR-T is designed to be an edge-compute inference engine for deep learning algorithms. You will therefore need a trained algorithm to deploy deep learning on the AIR-T. You can create and train one yourself, contract with Deepwave to create one, or obtain one through a third party.

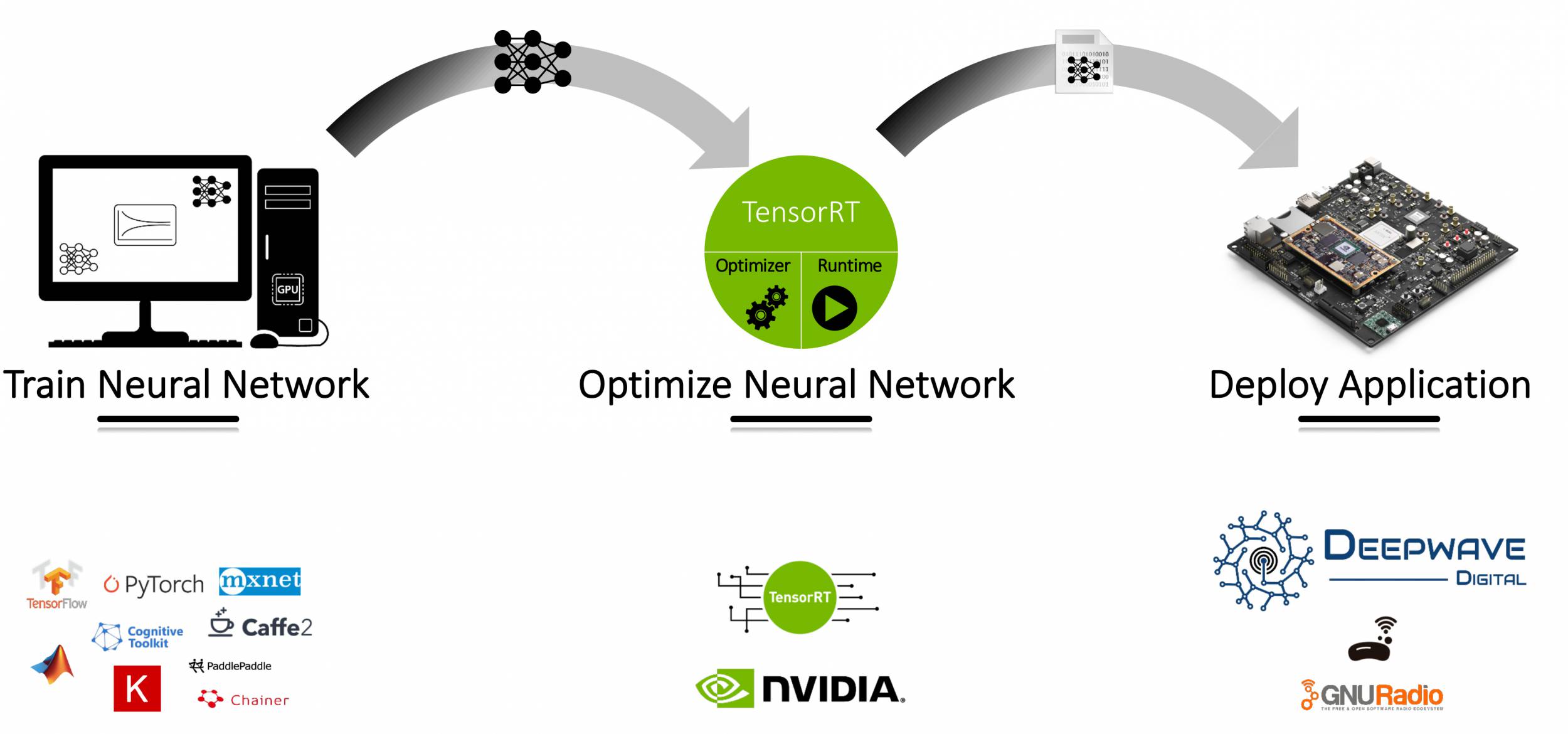

The easiest way to execute a deep learning algorithm on the AIR-T is to use NVIDIA’s TensorRT inference accelerator software. By providing support through our strategic partner, NVIDIA, we enable you to deploy AI algorithms trained in TensorFlow, MATLAB, Caffe2, Chainer, CNTK, MXNet, and PyTorch. The procedure is outlined in the flowchart, which uses a deep neural network (DNN) as the example. Note that if you are using an algorithm that is already trained, the first two steps will be bypassed.
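The handoff between a training machine and an inference device can be sketched in a few lines of NumPy. This is only an analogy for the workflow, not the TensorRT API and not the AIR-T toolchain: the `.npz` file, the `forward` function, the layer sizes, and the random "trained" weights are all hypothetical stand-ins. The point it illustrates is that the deployed side loads a saved model and runs it without any training code.

```python
import os
import tempfile

import numpy as np


def forward(x, w1, b1, w2, b2):
    """Forward pass of a tiny two-layer network with a ReLU hidden layer."""
    hidden = np.maximum(x @ w1 + b1, 0.0)
    return hidden @ w2 + b2


rng = np.random.default_rng(1)

# Stand-in for the result of a training run (random values, not a real model)
weights = {
    "w1": rng.normal(size=(8, 16)).astype(np.float32),
    "b1": np.zeros(16, dtype=np.float32),
    "w2": rng.normal(size=(16, 3)).astype(np.float32),
    "b2": np.zeros(3, dtype=np.float32),
}

# Export step: write the trained parameters to a file
path = os.path.join(tempfile.mkdtemp(), "model.npz")
np.savez(path, **weights)

# Deployment step: load the file and run inference only
with np.load(path) as model_file:
    deployed = {name: model_file[name] for name in model_file.files}

x = rng.normal(size=(4, 8)).astype(np.float32)  # four hypothetical input frames
reference = forward(x, **weights)               # output on the training side
scores = forward(x, **deployed)                 # output on the deployed side
predicted_class = scores.argmax(axis=1)
print(predicted_class)
```

If you start from a model that is already trained, you would begin at the load step, which mirrors the note above about bypassing the first two steps of the flowchart.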

While all of this may seem new to you, we assure you that the examples provided with the AIR-T will be more than enough to get you up and running quickly.

With the power of deep learning incorporated into wireless technology, the number of engineering hours required to build a complex RF system is significantly reduced. For example, the Deep Learning Signal Classifier shown below took approximately 6 hours to train on a single NVIDIA GP100 GPU, resulting in near-perfect signal classification. For more information on this classifier, see our blog post.