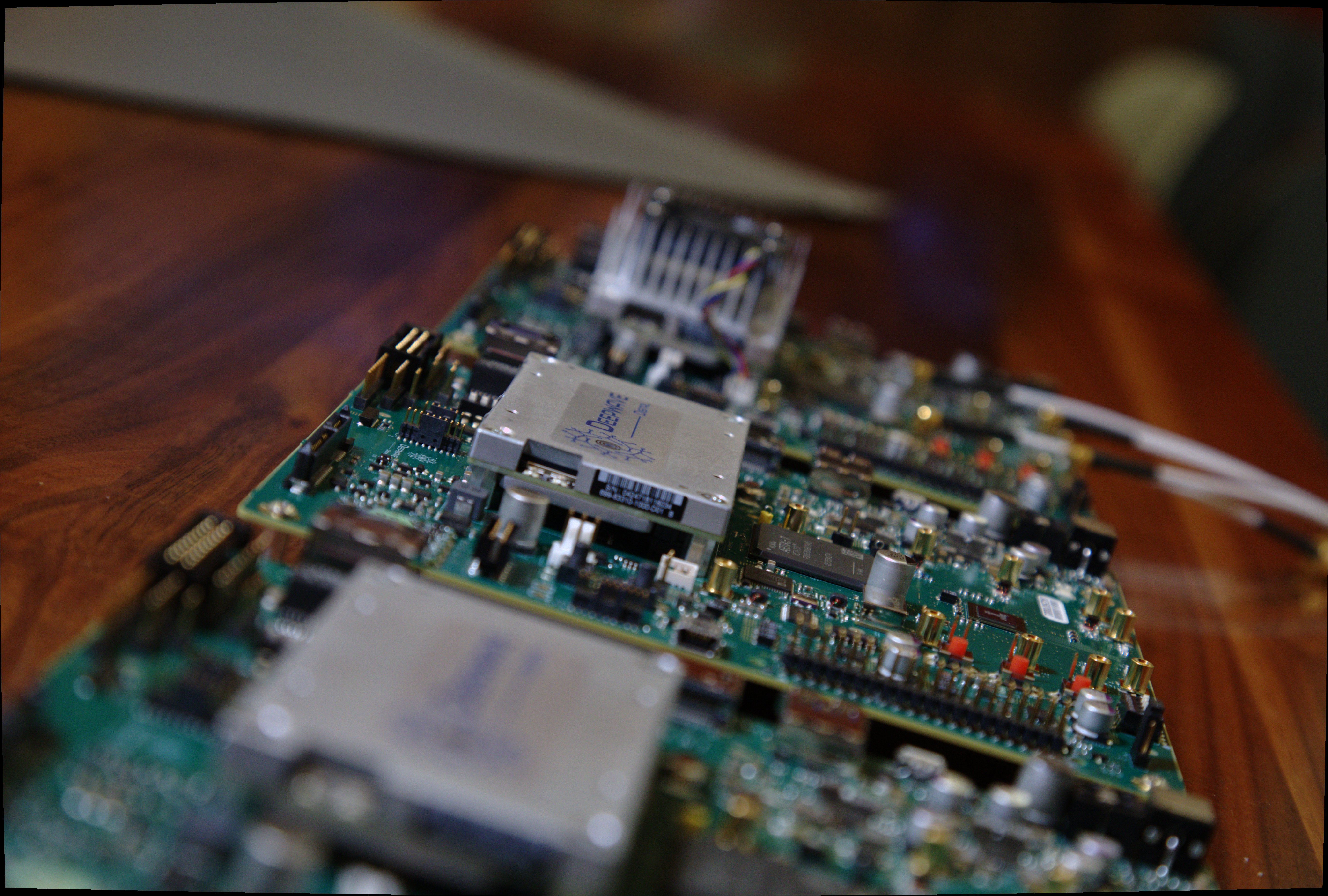

We are excited to announce that AirStack Version 0.3.0 is now available for download in the Developer Portal for the AIR-T. The Deepwave team has been working hard to bring this update to the community, and we believe these new features will allow customers to create new and exciting deep learning applications on the AIR-T. This release enables dual-channel continuous transmit (2x2 MIMO), signal stream triggering, and a new Python FPGA register API.

New Features in AirStack 0.3.0

-

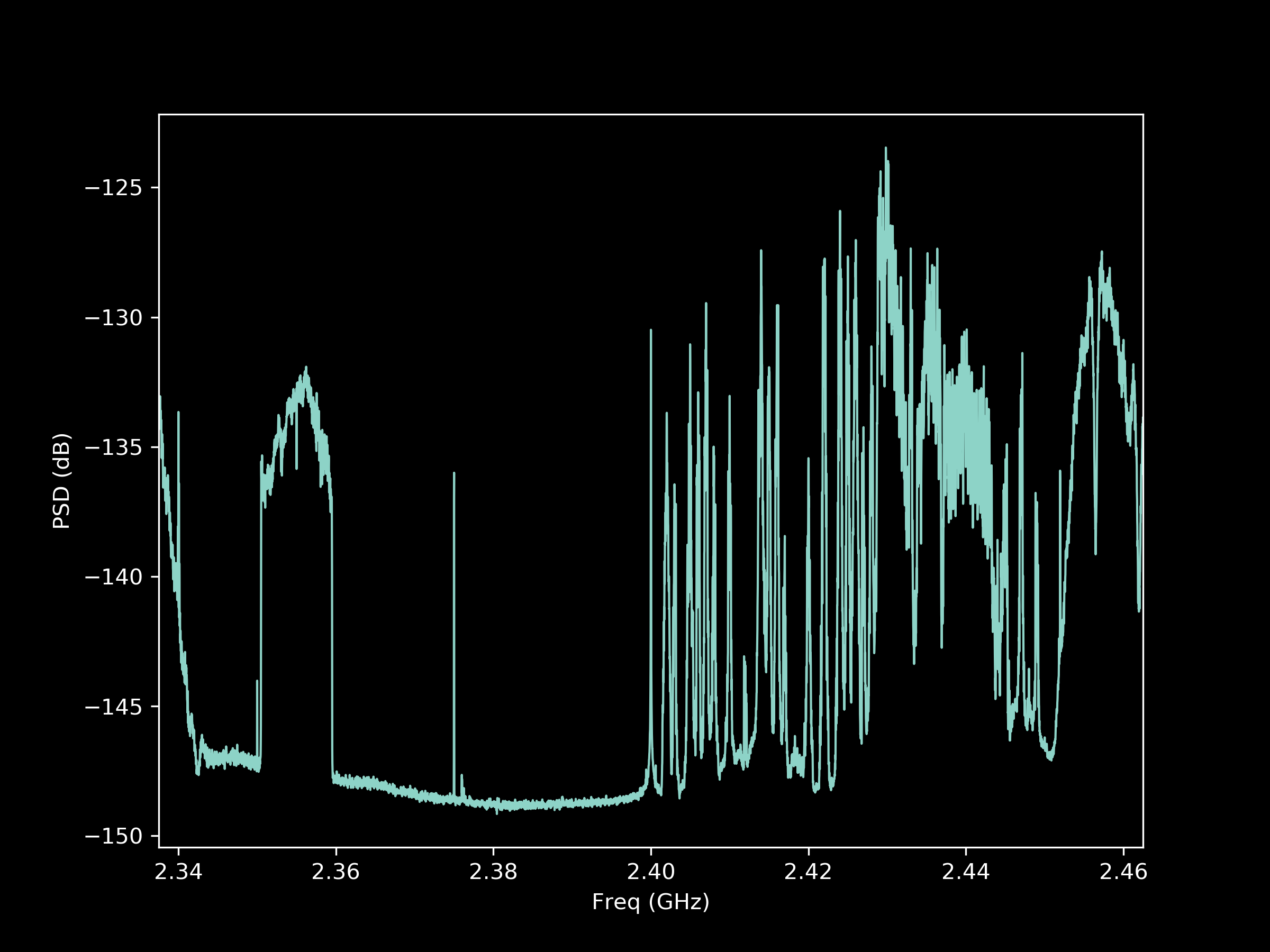

Enabled TX functionality - You can now continuously transmit waveforms using the AIR-T. We currently support all the same features on the transmit channels as we do on the receive channels. For an example of how to transmit a continuous signal with the AIR-T, see the tutorial Transmitting on the AIR-T.

- Hardware Triggering - The RX channels now support hardware triggering, so signal reception can be synchronized. Multiple AIR-Ts can begin receiving at exactly the same time and can also be synchronized with external equipment. To learn how to use this feature, see the "Triggering a Recording" tutorial.

- New FPGA Register API - Users now have direct access to the FPGA registers via the SoapySDR Register API. Most users won't need this functionality, but advanced users who are working with their own custom firmware now have a simpler way to control FPGA functionality from C++ or Python.

- Customized Memory Management - We have completely reworked memory management in the DMA driver. You can now tailor DMA transfers to and from the FPGA to your latency and throughput requirements.

  - The overall size of the per-channel receive buffer is now tunable via a kernel module parameter in Deepwave's AXI-ST DMA driver. The default size is 256 MB per channel, unchanged from prior versions, which buffers about half a second of data at 125 MSPS. It can be decreased to reduce memory overhead or increased if the user application requires it.

  - The size of each unit of data transfer, called a descriptor, is now tunable via a kernel module parameter. This option is intended for advanced users. Larger descriptors improve performance, especially when multiple radio channels are used at the same time. Smaller descriptors can help optimize for latency, particularly at lower sample rates. These effects are small but may be useful for specific applications.

- Device Details Query - The SoapySDR API and command-line utilities now report the versions of various software and hardware components of the AIR-T. This makes it easy to confirm that all AirStack components are up to date.
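Continuous transmit typically replays one fixed buffer back-to-back. A minimal sketch, assuming the application loops a single buffer: if the tone frequency is snapped so a whole number of cycles fits in the buffer, the wrap from the last sample to the first stays phase-continuous. The function name and the settings in the usage block are hypothetical, and the actual streaming to the radio is omitted so the sketch runs without hardware.

```python
import cmath
import math


def make_looping_tone(sample_rate_hz, target_freq_hz, num_samples):
    """Return (freq_hz, samples) for a complex tone that loops without a phase jump.

    The frequency is snapped to the nearest multiple of sample_rate_hz / num_samples,
    so the buffer holds a whole number of cycles and can be repeated back-to-back.
    """
    bin_width = sample_rate_hz / num_samples
    cycles = round(target_freq_hz / bin_width)  # whole cycles per buffer
    freq_hz = cycles * bin_width
    samples = [
        cmath.exp(2j * math.pi * cycles * n / num_samples)  # unit-amplitude complex sinusoid
        for n in range(num_samples)
    ]
    return freq_hz, samples


if __name__ == "__main__":
    # Hypothetical settings: 7.8125 MSPS, ~1 MHz requested tone, 4096-sample buffer.
    freq, buf = make_looping_tone(7.8125e6, 1.0e6, 4096)
    print(f"snapped tone frequency: {freq:.1f} Hz")
```

Before streaming, the list would typically be converted to a `numpy.complex64` array and passed repeatedly to SoapySDR's `writeStream` on a `SOAPY_SDR_CF32` TX stream.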
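For hardware triggering, the receive stream is armed first and the trigger starts the data flow. A minimal sketch, assuming the AIR-T driver honors SoapySDR's standard `SOAPY_SDR_WAIT_TRIGGER` activation flag (the "Triggering a Recording" tutorial is the authority on the exact calls). The helper name is hypothetical; it takes the device and flag as arguments so it can be exercised without hardware.

```python
def arm_and_capture(sdr, rx_stream, buffers, num_samples, wait_trigger_flag,
                    timeout_us=5_000_000):
    """Arm an RX stream so it starts on a hardware trigger, then read one block.

    sdr: a SoapySDR.Device, or any object with the same activateStream/readStream methods.
    wait_trigger_flag: assumed to be SoapySDR.SOAPY_SDR_WAIT_TRIGGER.
    Returns the number of samples read (readStream may return fewer than requested).
    """
    # Arm the stream; with the wait-trigger flag, samples should not flow until the trigger fires.
    sdr.activateStream(rx_stream, wait_trigger_flag)
    # Block for up to timeout_us microseconds waiting for the first samples.
    result = sdr.readStream(rx_stream, buffers, num_samples, timeoutUs=timeout_us)
    if result.ret < 0:
        # Negative values are SoapySDR error codes, e.g. SOAPY_SDR_TIMEOUT (-1).
        raise RuntimeError(f"readStream failed with status {result.ret}")
    return result.ret
```

On a real device, `sdr` would come from `SoapySDR.Device(...)`, `rx_stream` from `sdr.setupStream(...)`, and each buffer would be a NumPy array matching the stream format. A timeout status usually means the trigger never arrived.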
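Advanced users driving custom firmware through the new FPGA Register API often need to change one field of a register without disturbing its neighbors. A minimal read-modify-write sketch, assuming 32-bit registers and SoapySDR's named `readRegister(name, addr)` and `writeRegister(name, addr, value)` calls. The helper name, interface name, and field layout are hypothetical.

```python
def write_register_field(sdr, interface, addr, shift, width, value):
    """Read-modify-write a bit field in a register.

    Only bits [shift, shift + width) change; all other bits keep their current value.
    sdr needs SoapySDR-style readRegister(name, addr) and writeRegister(name, addr, value).
    """
    if not 0 <= value < (1 << width):
        raise ValueError("value does not fit in the field")
    mask = ((1 << width) - 1) << shift          # ones over the target field
    current = sdr.readRegister(interface, addr)  # read
    updated = (current & ~mask) | (value << shift)  # modify: clear field, then set it
    sdr.writeRegister(interface, addr, updated)  # write
    return updated
```

The interface names a device actually exposes can be listed with SoapySDR's `listRegisterInterfaces()`; register addresses and field layouts depend on the FPGA build.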
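The half-second figure for the default receive buffer follows from simple arithmetic, assuming each complex sample occupies 4 bytes (16-bit I plus 16-bit Q). A small sketch for estimating how long a given buffer lasts, or how large a buffer a target duration needs, before adjusting the kernel module parameter; the function names are hypothetical and the sketch does not set the parameter itself.

```python
import math


def buffer_duration_s(buffer_bytes, sample_rate_sps, bytes_per_sample=4):
    """Seconds of streaming data a per-channel receive buffer can hold.

    Assumes bytes_per_sample bytes per complex sample (default 4: 16-bit I + 16-bit Q).
    """
    return buffer_bytes / (sample_rate_sps * bytes_per_sample)


def buffer_bytes_for(duration_s, sample_rate_sps, bytes_per_sample=4):
    """Smallest buffer size in bytes that holds duration_s seconds of data."""
    return math.ceil(duration_s * sample_rate_sps * bytes_per_sample)


if __name__ == "__main__":
    default_bytes = 256 * 2**20  # 256 MB default, interpreted here as 256 MiB
    print(f"{buffer_duration_s(default_bytes, 125e6):.3f} s at 125 MSPS")
    print(f"{buffer_bytes_for(0.5, 31.25e6)} bytes for 0.5 s at 31.25 MSPS")
```

With the default buffer taken as 256 MiB, this gives about 0.537 s at 125 MSPS, consistent with the half-second figure above; a lower sample rate needs proportionally less memory for the same duration.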

For more information on Deepwave Digital, please reach out:
